I recently installed my first DAG with Exchange 2016 CU3 in a production environment. At first everything went smoothly. But after all databases had been created, I noticed that the databases were bouncing between the nodes and that the Event Log contained a lot of errors like this one:

Log Name: Application

Source: MSExchangeIS

Date: 12/14/2016 9:53:07 AM

Event ID: 1001

Task Category: General

Level: Error

Keywords: Classic

User: N/A

Computer: FABEX01.fabrikam.local

Description:

Microsoft Exchange Server Information Store has encountered an internal logic error. Internal error text is (ProcessId perf counter does not match actual process id.) with a call stack of ( at Microsoft.Exchange.Server.Storage.Common.ErrorHelper.AssertRetail(Boolean assertCondition, String message)

at Microsoft.Exchange.Server.Storage.Common.Globals.AssertRetail(Boolean assertCondition, String message)

at

The store worker processes kept crashing.

Symptoms

The servers were freshly installed and did not host any mailboxes yet. The layout was as follows:

- 4 node DAG

- Windows Server 2012 R2 as Operating System

- Exchange 2016 CU3

- 72 databases in total:

  - 4 copies each (1 active, 2 passive and 1 lagged)

  - 4 databases per volume

  - 18 active per server in normal operation

As I had never seen behaviour like this before, I opened a PSS case and started digging into the issue in parallel. After a while, I was able to see a pattern.

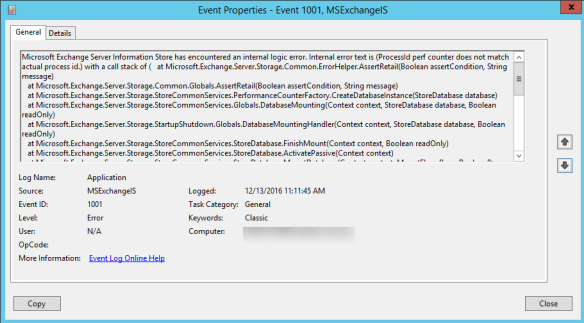

First the store encountered an internal error

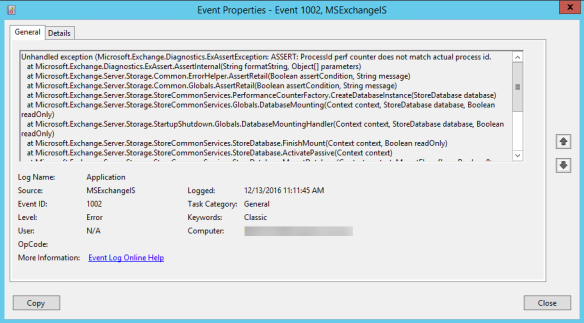

followed by an unhandled exception

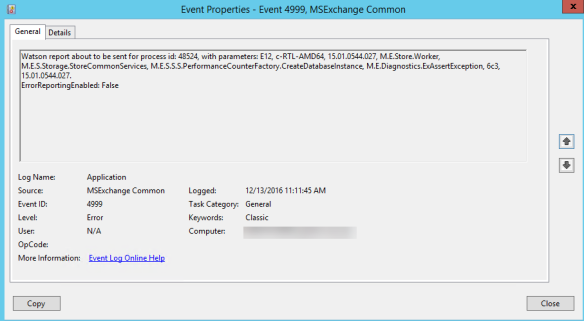

which ended in a Watson report

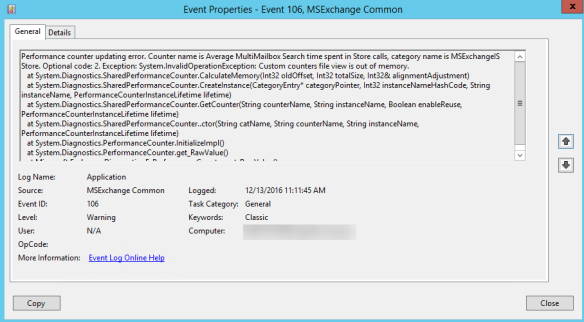

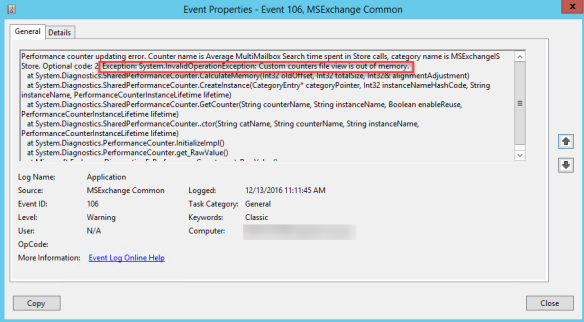

After the Watson report, the logs were flooded with performance counter warnings

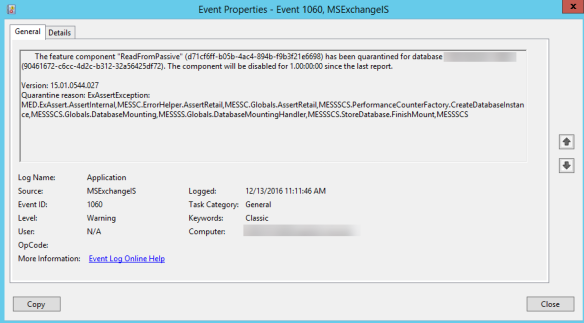

All of this ended with the “ReadFromPassive” feature being quarantined.

Upgrade to Exchange CU4

As we couldn’t figure out what was going on and our EE had no hints either, we decided to upgrade to Exchange 2016 CU4, hoping that a potential issue had already been fixed in the latest version. Sadly, this did not fix our issue.

Root Cause

After a while we finally found the root cause of the unhandled exception that made the store worker processes crash. Take a closer look at one of the previously shown events:

Performance counter updating error. Counter name is Average MultiMailbox Search time spent in FullTextIndex, category name is MSExchangeIS Store. Optional code: 2. Exception: System.InvalidOperationException: Custom counters file view is out of memory.

at System.Diagnostics.SharedPerformanceCounter.CalculateMemory(Int32 oldOffset, Int32 totalSize, Int32& alignmentAdjustment)

Various performance counters could not be initialized because the shared memory allocated for them was too small, and this caused the store worker processes to crash.
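To check whether a server shows the same symptom, the Application log can be searched for this exception text. The following is only a sketch: it assumes the warnings are logged with event ID 106 (the ID mentioned later in this post) and that the message follows the format quoted above. It deliberately does not filter on a provider name.

```powershell
# Sketch: count "out of memory" performance counter warnings per counter category.
# Assumption: the warnings are written to the Application log with event ID 106.
$pattern = 'Custom counters file view is out of memory'

$hits = Get-WinEvent -FilterHashtable @{ LogName = 'Application'; Id = 106 } -MaxEvents 5000 -ErrorAction SilentlyContinue |
    Where-Object { $_.Message -match $pattern }

# Pull the category name out of each message ("..., category name is <name>. Optional code: ...").
$hits |
    ForEach-Object {
        if ($_.Message -match 'category name is (?<category>.+?)\. Optional code') {
            $Matches['category']
        }
    } |
    Group-Object |
    Sort-Object -Property Count -Descending |
    Select-Object -Property Count, Name
```

The categories with the highest counts are the first candidates for a larger FileMappingSize.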

Global Shared Memory

You can read more about how FileMappingSize works here, but the main statement is the following:

“Memory in the global shared memory cannot be released by individual performance counters, so eventually global shared memory is exhausted if a large number of performance counter instances with different names are created.”

Resolution

Now that we had found the root cause, we needed to fix it by increasing the memory for the affected performance counters. There is no way to calculate the required value, or at least we couldn’t find one; it simply depends on your environment. In my environment the following needed to be tweaked:

- MSExchangeISStorePerfCounters

- PhysicalAccessPerfCounters

There are several methods to do so:

Method 1:

Do it the way Exchange does during setup. For this you need to load the PSSnapin Microsoft.Exchange.Management.PowerShell.Setup:

Add-PSSnapin -Name Microsoft.Exchange.Management.PowerShell.Setup

New-PerfCounters -DefinitionFileName MSExchangeISStorePerfCounters.xml -FileMappingSize 10485760

New-PerfCounters -DefinitionFileName PhysicalAccessPerfCounters.xml -FileMappingSize 8388608

Method 2:

Edit the Registry for the specific counters. A hint for this can be found in the previously mentioned article:

“For the size of separate shared memory, the DWORD FileMappingSize value in the registry key HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\<category name>\Performance is referenced first…”
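A minimal sketch of the registry approach, assuming the key layout from the quote above. The helper name `Set-PerfCounterFileMappingSize` is hypothetical, and the category name in the example call is an assumption taken from the event text; the key under Services has to match the actual category on your server. The size is simply the value used for the store counters in method 1, not a calculated recommendation.

```powershell
# Sketch: set the FileMappingSize DWORD for one performance counter category.
# Set-PerfCounterFileMappingSize is a hypothetical helper name, not an Exchange cmdlet.
function Set-PerfCounterFileMappingSize {
    [CmdletBinding(SupportsShouldProcess = $true)]
    param(
        [Parameter(Mandatory = $true)]
        [string]$CategoryName,

        [Parameter(Mandatory = $true)]
        [ValidateRange(1, 2147483647)]
        [int]$SizeBytes
    )

    $key = "HKLM:\SYSTEM\CurrentControlSet\Services\$CategoryName\Performance"

    # Fail early instead of creating a key that the counter category does not use.
    if (-not (Test-Path -LiteralPath $key)) {
        throw "Registry key not found: $key"
    }

    if ($PSCmdlet.ShouldProcess($key, "Set FileMappingSize to $SizeBytes")) {
        New-ItemProperty -LiteralPath $key -Name 'FileMappingSize' -PropertyType DWord -Value $SizeBytes -Force | Out-Null
    }
}

# Example call (category name is an assumption); -WhatIf only shows what would be changed.
Set-PerfCounterFileMappingSize -CategoryName 'MSExchangeIS Store' -SizeBytes 10485760 -WhatIf
```

Remove `-WhatIf` to actually write the value. The processes that use the counters most likely have to be restarted before a new size takes effect; treat that as an assumption to verify in your environment.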

Method 3:

Edit the Machine.config file and increase the default value from 524288 to the desired one, as described here.

Note: This will increase the default value for ALL performance counters, which might cause other issues!
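Before touching the global default, it helps to see what is currently configured. This sketch only reads the value. It assumes the setting is the `filemappingsize` attribute of the `performanceCounters` element under `system.diagnostics`, which is where the .NET Framework configuration schema places it.

```powershell
# Sketch: read the global filemappingsize from the Machine.config of the running .NET Framework.
# Note: a 64-bit and a 32-bit PowerShell session resolve to different Machine.config files.
$configPath = [System.Runtime.InteropServices.RuntimeEnvironment]::SystemConfigurationFile
[xml]$config = Get-Content -LiteralPath $configPath -Raw

$node = $config.SelectSingleNode('/configuration/system.diagnostics/performanceCounters')

if ($node -and $node.HasAttribute('filemappingsize')) {
    '{0}: filemappingsize = {1}' -f $configPath, $node.GetAttribute('filemappingsize')
} else {
    '{0}: no explicit filemappingsize, the default of 524288 applies' -f $configPath
}
```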

Conclusion

We decided to edit the Registry as we are using Desired State Configuration and this needed the least administrative effort.

We asked to have the setup changed so that it increases the memory for these performance counters, as it already does for some others. When you parse an ExchangeSetup log, you will see that Exchange already increases the memory for a few counters; sometimes the Registry is edited and sometimes the PSSnapin is used. If you run into such an issue and have modified FileMappingSize, keep this in mind when you try to solve Event ID 106 as described in this KB:

I know that I might have run into a corner case, but I hope this helps someone. Just in case…

How did you ascertain which values needed increasing? Was there a 106 event ID for each? Thanks for posting this!


Hi Chris, there was no value. I just compared what Exchange setup sets for a few other perf counters and then used trial and error. There is no way to calculate the needed value (I had an MS case open for this…) and every landscape might need different values. These servers have 72 databases in total, of which 18 are active per server.

Ciao,

Ingo


Thanks for the reply, Ingo. Unfortunately, I have the 1001, 1002, and 4999 events, but not the 106 error with memory called out, so this is only a partial match for me. Did you have any additional logging enabled to see event ID 106?


Hi Chris, good question… I don’t remember exactly, but I would try increasing the log level for “MSExchange Common\General” and “MSExchange Common\Logging”. Which CU are you running?

Ciao,

Ingo


Hello!

You’re a total lifesaver! Thank you so much! I’ve been banging my head against the wall all day.


I’m glad the post helped! 😜
